WalrusDB uses S3 Express One Zone as the durable persistence layer for its Write-Ahead Log (WAL). Writing to S3 on every transaction incurs high latency and a per-request cost.

To solve this, we can implement an in-memory buffer that temporarily stores WAL writes and then syncs them to S3 asynchronously in batches.

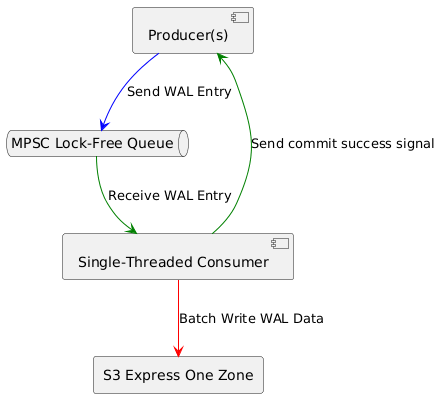

Overall design

The design uses a lock-free, concurrent queue (ArrayQueue) to buffer WAL entries in memory. Producers push new WAL entries to this queue. To allow producers to wait for persistence, each entry includes a channel sender (oneshot::Sender) used to signal completion. A single consumer background task continuously reads from the queue, serializes all pending transactions into a single batch, and writes the batch to S3. Once the batch is successfully persisted, the consumer notifies the respective producers via the channel sender in each WAL entry.

WAL Entry

Each entry in the WAL buffer contains the key, the value, and a oneshot::Sender for notifying the producer once the entry has been synced to S3.

```rust
pub struct WalEntry {
    pub key: Key,
    pub value: Value,
    // Signalled by the consumer once the entry has been persisted to S3.
    pub async_waiter: Option<oneshot::Sender<()>>,
}
```
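To make the acknowledgement handshake concrete, here is a minimal sketch of how a producer might pair an entry with a receiver and how the consumer side would signal it. It is not the WalrusDB code: `std::sync::mpsc` stands in for Tokio's `oneshot` channel so the example runs without external crates, `Key` and `Value` are simplified to byte vectors, and `entry_with_waiter` and `acknowledge` are hypothetical helper names.

```rust
use std::sync::mpsc::{self, Receiver, Sender};

// Simplified stand-ins for the WalrusDB key and value types (assumption).
type Key = Vec<u8>;
type Value = Vec<u8>;

pub struct WalEntry {
    pub key: Key,
    pub value: Value,
    // std::sync::mpsc::Sender is used here in place of tokio's oneshot::Sender.
    pub async_waiter: Option<Sender<()>>,
}

/// Builds an entry together with the receiver the producer waits on.
pub fn entry_with_waiter(key: Key, value: Value) -> (WalEntry, Receiver<()>) {
    let (tx, rx) = mpsc::channel();
    let entry = WalEntry {
        key,
        value,
        async_waiter: Some(tx),
    };
    (entry, rx)
}

/// What the consumer does for each entry after a successful write
/// (hypothetical helper).
pub fn acknowledge(entry: WalEntry) {
    if let Some(waiter) = entry.async_waiter {
        // The producer may have stopped waiting; a failed send is ignored.
        let _ = waiter.send(());
    }
}
```

A producer would push the entry into the buffer and then block on `rx.recv()` (or `.await` the receiver in the Tokio version) until the consumer acknowledges it.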

Producer

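The WalProducer provides the interface for appending new entries to the shared buffer. The buffer itself is an ArrayQueue from the crossbeam-queue crate, which is a bounded, lock-free MPMC queue. When the queue is full, push hands the rejected entry back as an error, and append passes that result on to the caller.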

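```rust
use std::sync::Arc;

use crossbeam_queue::ArrayQueue;

pub struct WalProducer {
    ring_buffer: Arc<ArrayQueue<WalEntry>>,
}

impl WalProducer {
    // Returns Err(entry) when the buffer is full.
    pub fn append(&self, entry: WalEntry) -> Result<(), WalEntry> {
        self.ring_buffer.push(entry)
    }
}
```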
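Because the buffer is bounded, a caller has to decide what to do when an append is rejected. The sketch below shows one possible policy, a bounded retry with a short pause, and is an assumption rather than WalrusDB's behaviour. `BoundedQueue` is a Mutex-based stand-in that only mimics the `Result<(), T>` shape of `ArrayQueue::push` so the example needs no external crates, and `append_with_retry` is a hypothetical helper.

```rust
use std::collections::VecDeque;
use std::sync::Mutex;
use std::thread;
use std::time::Duration;

/// Mutex-based stand-in for a bounded queue: push fails when full and
/// returns the rejected item, like ArrayQueue::push.
pub struct BoundedQueue<T> {
    items: Mutex<VecDeque<T>>,
    capacity: usize,
}

impl<T> BoundedQueue<T> {
    pub fn new(capacity: usize) -> Self {
        Self {
            items: Mutex::new(VecDeque::with_capacity(capacity)),
            capacity,
        }
    }

    pub fn push(&self, item: T) -> Result<(), T> {
        let mut items = self.items.lock().unwrap();
        if items.len() >= self.capacity {
            return Err(item);
        }
        items.push_back(item);
        Ok(())
    }

    pub fn pop(&self) -> Option<T> {
        self.items.lock().unwrap().pop_front()
    }
}

/// Retries a rejected push up to `max_attempts` times, sleeping for
/// `backoff` between attempts. Returns the item if every attempt fails.
pub fn append_with_retry<T>(
    queue: &BoundedQueue<T>,
    mut item: T,
    max_attempts: usize,
    backoff: Duration,
) -> Result<(), T> {
    for attempt in 0..max_attempts {
        match queue.push(item) {
            Ok(()) => return Ok(()),
            Err(rejected) => {
                item = rejected;
                if attempt + 1 < max_attempts {
                    thread::sleep(backoff);
                }
            }
        }
    }
    Err(item)
}
```

Returning the entry on final failure keeps the decision with the caller, who can surface an error to the client or apply a different backpressure strategy.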

Consumer

The WalConsumer runs in a dedicated Tokio task. It wakes up once per flush interval, drains all entries from the queue into a batch, and writes the batch to S3. After a successful write, it notifies the respective producers via async_waiter.

```rust
use std::time::Duration;

pub struct WalConsumer {
    ring_buffer: Arc<ArrayQueue<WalEntry>>,
    flush_interval: Duration,
}

impl WalConsumer {
    pub async fn start(&mut self) {
        loop {
            tokio::time::sleep(self.flush_interval).await;

            // Drain everything that is currently queued into one batch.
            let mut wal_batch = Vec::new();
            while let Some(wal_entry) = self.ring_buffer.pop() {
                wal_batch.push(wal_entry);
            }

            if wal_batch.is_empty() {
                continue;
            }

            // ... Sync wal_batch to S3

            // Notify the producer that the entry has been persisted.
            for entry in wal_batch {
                if let Some(waiter) = entry.async_waiter {
                    let _ = waiter.send(());
                }
            }
        }
    }
}
```
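The loop above leaves the S3 write elided, so it does not show what happens to waiting producers if that write fails. The following sketch illustrates one way to acknowledge only on success; it is an assumption, not the WalrusDB implementation. The queue is a `Mutex<VecDeque<_>>`, `std::sync::mpsc` replaces the oneshot channel, and `flush_once` and its `persist` callback are hypothetical names standing in for one iteration of the loop and the S3 write.

```rust
use std::collections::VecDeque;
use std::sync::mpsc::Sender;
use std::sync::Mutex;

pub struct WalEntry {
    pub key: Vec<u8>,
    pub value: Vec<u8>,
    // Stand-in for tokio's oneshot::Sender.
    pub async_waiter: Option<Sender<()>>,
}

/// Drains the queue, persists the batch, and acknowledges only on success.
/// Returns the number of entries that were acknowledged.
pub fn flush_once<F>(
    queue: &Mutex<VecDeque<WalEntry>>,
    persist: F,
) -> Result<usize, String>
where
    F: FnOnce(&[WalEntry]) -> Result<(), String>,
{
    let batch: Vec<WalEntry> = queue.lock().unwrap().drain(..).collect();
    if batch.is_empty() {
        return Ok(0);
    }

    // If persist fails, `?` returns early and the batch is dropped. Dropping
    // the senders closes each channel, so waiters see an error rather than
    // a false acknowledgement.
    persist(&batch[..])?;

    let count = batch.len();
    for entry in batch {
        if let Some(waiter) = entry.async_waiter {
            let _ = waiter.send(());
        }
    }
    Ok(count)
}
```

Dropping the failed batch is only one option; a real consumer might instead retry the write or re-queue the entries. Tokio's oneshot receiver behaves similarly to the stand-in here: it resolves to an error when its sender is dropped without sending.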

Further improvements

- MPSC Buffer: The current implementation uses an MPMC buffer, which is likely to have higher synchronization overhead than an MPSC buffer. Since there is only a single consumer, migrating to an MPSC buffer should improve performance.

- Buffer Threshold Flushing: To improve latency, the consumer can also be notified to wake up and flush the buffer immediately when it reaches a predefined size or capacity threshold.

- Batch Size Limiting: Large transaction batches may cause significant latency spikes for producers waiting for acknowledgement. Large batches can be broken down into smaller sub-batches before syncing them to S3.
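The threshold-flushing and batch-size ideas can be sketched together. This is an illustrative, std-only version built on a `Mutex` and `Condvar`, not a proposal for the actual lock-free implementation; `ThresholdBuffer`, `wait_and_drain`, and `split_batch` are hypothetical names. An async version would need a Tokio-friendly notification primitive instead of a `Condvar`.

```rust
use std::collections::VecDeque;
use std::sync::{Condvar, Mutex};
use std::time::Duration;

/// Buffer that wakes the consumer early once `threshold` entries are queued.
pub struct ThresholdBuffer<T> {
    items: Mutex<VecDeque<T>>,
    ready: Condvar,
    threshold: usize,
}

impl<T> ThresholdBuffer<T> {
    pub fn new(threshold: usize) -> Self {
        Self {
            items: Mutex::new(VecDeque::new()),
            ready: Condvar::new(),
            threshold,
        }
    }

    /// Producer side: enqueue, and signal the consumer at the threshold.
    pub fn push(&self, item: T) {
        let mut items = self.items.lock().unwrap();
        items.push_back(item);
        if items.len() >= self.threshold {
            self.ready.notify_one();
        }
    }

    /// Consumer side: block until the threshold is reached or
    /// `flush_interval` elapses, then drain whatever is queued.
    pub fn wait_and_drain(&self, flush_interval: Duration) -> Vec<T> {
        let guard = self.items.lock().unwrap();
        let (mut guard, _timed_out) = self
            .ready
            .wait_timeout_while(guard, flush_interval, |items| {
                items.len() < self.threshold
            })
            .unwrap();
        let drained: Vec<T> = guard.drain(..).collect();
        drained
    }
}

/// Splits a drained batch into sub-batches of at most `max_len` entries,
/// preserving order. A `max_len` of 0 is treated as 1.
pub fn split_batch<T>(batch: Vec<T>, max_len: usize) -> Vec<Vec<T>> {
    let max_len = max_len.max(1);
    let mut out = Vec::new();
    let mut iter = batch.into_iter().peekable();
    while iter.peek().is_some() {
        out.push(iter.by_ref().take(max_len).collect());
    }
    out
}
```

With this shape, the consumer writes and acknowledges each sub-batch in turn, so producers whose entries land in an early sub-batch are not held up by the rest of a large drain.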

You can follow the development of WalrusDB on GitHub.