What?

Kafka is a distributed event (message) streaming platform. It carries events from one system to another.

Features

- Horizontally Scalable

- High Throughput

- Ability to re-consume old messages

- No global ordering of messages; ordering is preserved only within a partition

- At-least-once delivery guarantee

- Supports both the point-to-point delivery model, in which multiple consumers jointly consume a single copy of all messages in a topic, and the publish/subscribe model, in which each consumer retrieves its own copy of a topic

- Efficient Transfer: A batched API lets producers and consumers send and receive multiple messages in one round trip
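The batching idea in the last feature can be sketched as follows. This is a minimal illustration, not Kafka's client API: `BatchingProducer`, `send_batch`, and `batch_size` are hypothetical names, and `send_batch` stands in for one network round trip.

```python
class BatchingProducer:
    """Buffers messages and hands them over in batches (hypothetical sketch)."""

    def __init__(self, send_batch, batch_size):
        self.send_batch = send_batch    # callable that performs one round trip
        self.batch_size = batch_size
        self.buffer = []

    def publish(self, message):
        self.buffer.append(message)
        # Send only when a full batch has accumulated.
        if len(self.buffer) >= self.batch_size:
            self.flush()

    def flush(self):
        # Send whatever is buffered, even if the batch is not full.
        if self.buffer:
            self.send_batch(list(self.buffer))
            self.buffer.clear()


round_trips = []                        # each element is one batch that was "sent"
producer = BatchingProducer(round_trips.append, batch_size=3)
for i in range(7):
    producer.publish(f"event-{i}")
producer.flush()
```

Seven messages are delivered in three round trips instead of seven.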

Why?

LinkedIn needed to use generated data such as user activities, likes, page views, and comments in real time to power features such as ads, the user feed, and search rankings. Requirements:

- High throughput: need to efficiently store and distribute a large number of messages.

- Weak delivery guarantee: losing a few messages is acceptable (this is a tolerance in the requirements, not a Kafka feature).

- First-class distributed system support: partition and store messages on different machines.

- Performant: performance should not degrade as the amount of stored events increases.

- Consumer pull model: consumers (one or many) of events should decide the rate at which they consume messages, so they do not get flooded.

None of the existing systems satisfied all these requirements.

How?

Kafka is built as a cross between a messaging system and a log storage system.

Concepts

- Topics: Logical group of events.

- Producer: System publishing events to a topic

- Consumer: System consuming events from a topic

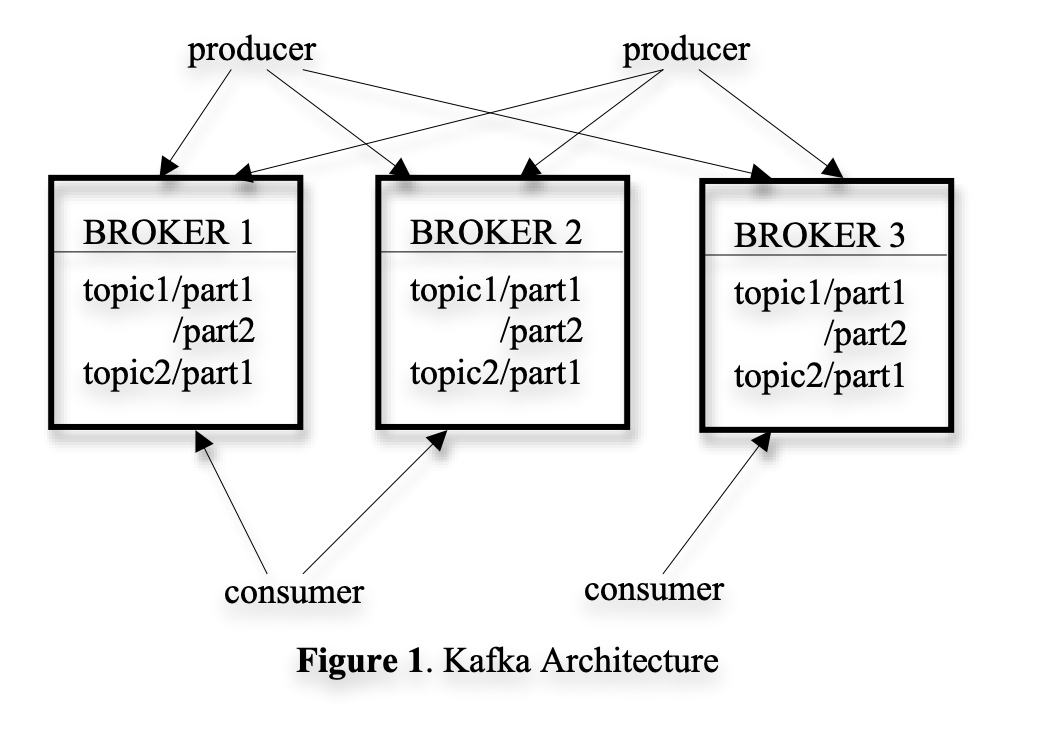

- Brokers: Servers where events of topics are stored. Producers push events here and consumers pull from here.

- Partitions: To balance load, a topic is divided into multiple partitions and each broker stores one or more of those partitions.

- Consumer Groups: A collection of consumers that subscribe to the same topic and jointly consume a single copy of all its messages.

Architecture:

Storage

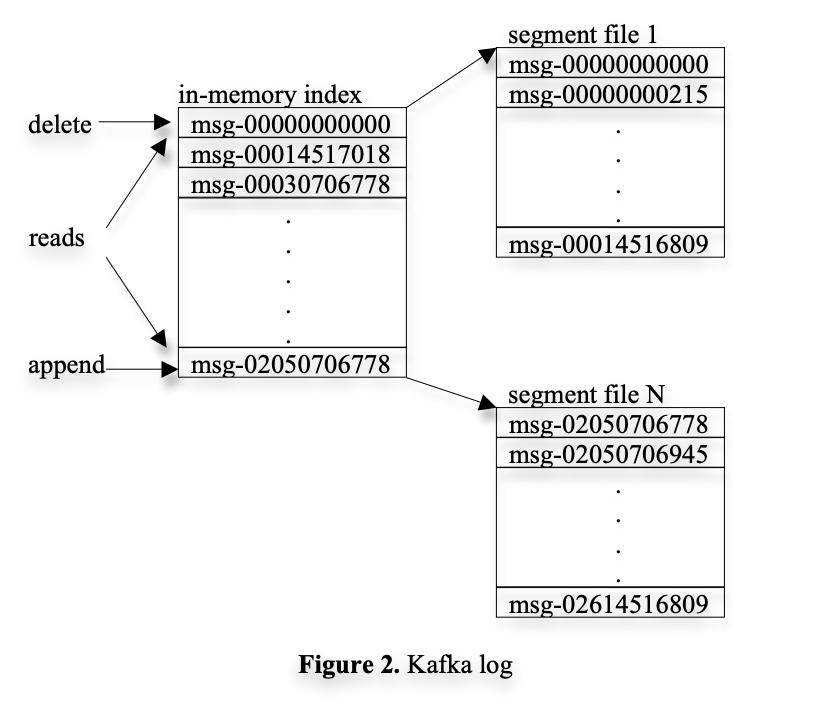

Kafka has a very simple storage layout. Each partition of a topic corresponds to a logical log. Physically, a log is implemented as a set of segment files of approximately the same size (e.g. 1GB). Every time a producer publishes a message to a partition, the broker simply appends the message to the last segment file.
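The append path described above can be sketched roughly as follows. This is an in-memory illustration under stated assumptions, not Kafka's implementation: `PartitionLog`, `Segment`, and `max_segment_bytes` are hypothetical names, segments are byte arrays rather than files, and offsets are byte positions in the log.

```python
from dataclasses import dataclass, field


@dataclass
class Segment:
    base_offset: int                    # log offset of the first byte in this segment
    data: bytearray = field(default_factory=bytearray)


class PartitionLog:
    """Append-only log split into segments of roughly equal size (sketch)."""

    def __init__(self, max_segment_bytes):
        self.max_segment_bytes = max_segment_bytes
        self.segments = [Segment(base_offset=0)]
        self.next_offset = 0            # offset the next message will receive

    def append(self, message: bytes) -> int:
        active = self.segments[-1]
        # Roll to a new segment once the active one would overflow.
        if active.data and len(active.data) + len(message) > self.max_segment_bytes:
            active = Segment(base_offset=self.next_offset)
            self.segments.append(active)
        offset = self.next_offset
        active.data.extend(message)     # always append to the last segment
        self.next_offset += len(message)
        return offset


log = PartitionLog(max_segment_bytes=10)
offsets = [log.append(m) for m in (b"hello", b"kafka", b"log")]
```

With a 10-byte limit, the first two messages fill the first segment and the third starts a new one.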

Each message is addressed by its logical offset in the log (id and offset are used interchangeably). This avoids the overhead of maintaining seek-intensive random-access index structures that map message ids to actual message locations. Message ids, or offsets, are increasing but not consecutive: to compute the id of the next message, we add the length of the current message to its id.
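As a small worked example of this id arithmetic, the sketch below derives the id of each message from the message lengths. The helper name `message_ids` and the sample lengths are made up for illustration.

```python
def message_ids(lengths, first_id=0):
    """Return the id of each message, given the message lengths in bytes."""
    ids = []
    next_id = first_id
    for length in lengths:
        ids.append(next_id)
        # The next id is the current id plus the current message's length.
        next_id += length
    return ids


ids = message_ids([5, 12, 7])   # ids == [0, 5, 17]
```

The ids increase but skip values, which is what "increasing but not consecutive" means here.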

Messages are generally consumed sequentially: a consumer tells the broker the message id in a partition from which it wants to start consuming. To find the segment file containing the requested message, each broker keeps an in-memory sorted mapping from the first offset of each segment to its segment file.
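One way to picture this lookup is a binary search over the sorted first offsets of the segments. The sketch below assumes a plain Python list as the in-memory structure; `find_segment` and the sample offsets are hypothetical.

```python
from bisect import bisect_right


def find_segment(base_offsets, offset):
    """Return the index of the segment that contains `offset`.

    `base_offsets` is the sorted list of first offsets, one per segment.
    """
    if not base_offsets or offset < base_offsets[0]:
        raise ValueError(f"offset {offset} is before the start of the log")
    # The owning segment is the last one whose first offset is <= offset.
    return bisect_right(base_offsets, offset) - 1


base_offsets = [0, 1000, 2500]
segment_index = find_segment(base_offsets, 1700)   # falls in the second segment
```

The search is logarithmic in the number of segments and touches no disk.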

Kafka also keeps a separate index file for each segment file, which maps message ids to file-relative offsets so that a message can be found quickly within the segment. The index does not store every id, only ids at a regular byte interval, controlled by the broker property log.index.interval.bytes and the topic-level property index.interval.bytes.
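The sparse index can be sketched as below: jump to the nearest indexed entry at or before the target id, then scan forward. This is an illustration only. The 12-byte record header, `write_segment`, `read_message`, and the `interval_bytes` parameter are assumptions of the sketch and do not reflect Kafka's on-disk format.

```python
import struct
from bisect import bisect_right

# Hypothetical record layout: 8-byte message id, 4-byte payload length, payload.
HEADER = struct.Struct(">QI")


def write_segment(records, interval_bytes):
    """Serialize records and index roughly one entry per `interval_bytes`."""
    data = bytearray()
    index = []                          # list of (message_id, file_position)
    last_indexed_at = None
    for message_id, payload in records:
        position = len(data)
        if last_indexed_at is None or position - last_indexed_at >= interval_bytes:
            index.append((message_id, position))
            last_indexed_at = position
        data += HEADER.pack(message_id, len(payload)) + payload
    return bytes(data), index


def read_message(data, index, target_id):
    """Find a payload by jumping via the sparse index, then scanning forward."""
    ids = [entry_id for entry_id, _ in index]
    slot = bisect_right(ids, target_id) - 1
    if slot < 0:
        raise KeyError(target_id)
    position = index[slot][1]
    while position < len(data):
        message_id, length = HEADER.unpack_from(data, position)
        position += HEADER.size
        if message_id == target_id:
            return data[position:position + length]
        if message_id > target_id:
            break                       # passed the target; it is not in this segment
        position += length
    raise KeyError(target_id)


records = [(i, f"msg-{i}".encode()) for i in range(10)]
data, index = write_segment(records, interval_bytes=40)
payload = read_message(data, index, 7)
```

A smaller interval gives a larger index and shorter scans; a larger interval trades memory for more scanning.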

Distributed Coordination

Producers: Producers are free to publish messages to any partition they want. Load is generally balanced across partitions by computing the partition on the client side from a hash of a message property, such as the message key. Producers need little coordination.
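A client-side partitioner of this kind might look like the sketch below. `choose_partition` is a hypothetical helper, and CRC32 is used only as a stable stand-in hash; it is not the hash Kafka's own clients use.

```python
import zlib


def choose_partition(key: bytes, num_partitions: int) -> int:
    """Map a message key to a partition number (illustrative only)."""
    if num_partitions <= 0:
        raise ValueError("num_partitions must be positive")
    # CRC32 is a stand-in: any hash that is stable across processes would do.
    return zlib.crc32(key) % num_partitions


partition = choose_partition(b"user-42", num_partitions=8)
```

Because the hash is deterministic, all messages with the same key land in the same partition, which preserves per-key ordering. Note that changing the number of partitions changes the mapping.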

Consumers: In the publish/subscribe model, where each consumer receives its own copy of the topic's messages, coordination is not necessary: the consumers belong to different consumer groups and each consumes the topic independently.

In the point-to-point delivery model, however, multiple consumers belonging to the same consumer group jointly consume a single copy of all messages in a topic, so the consumers must coordinate. Within a consumer group, each message of a subscribed topic is delivered to only one consumer, and the messages stored in the brokers must be divided evenly among the consumers.

In Kafka, a partition within a topic is the smallest unit of parallelism; to scale a topic horizontally, we increase its number of partitions (and subsequently the number of brokers). To coordinate among consumers, each partition is assigned to only one consumer within the group, although a consumer can have one or more partitions assigned. All partitions of a topic are distributed among the consumers in the consumer group.

This assignment of partitions to consumers is coordinated by ZooKeeper. When consumers or brokers are added or removed (for example, after a crash), a rebalance is triggered, which redistributes and reassigns partitions among consumers.
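The assignment and rebalance behaviour can be sketched as below. This is a simplified single-process stand-in for the coordination described above: `ConsumerGroup` is a hypothetical class, and the round-robin strategy is an assumption of the sketch, not necessarily the strategy Kafka applies.

```python
class ConsumerGroup:
    """Tracks group members and reassigns partitions on every change (sketch)."""

    def __init__(self, partitions):
        self.partitions = sorted(partitions)
        self.members = set()
        self.assignment = {}            # consumer -> list of owned partitions

    def join(self, consumer):
        self.members.add(consumer)
        self._rebalance()

    def leave(self, consumer):
        self.members.discard(consumer)
        self._rebalance()

    def _rebalance(self):
        members = sorted(self.members)
        self.assignment = {member: [] for member in members}
        if not members:
            return
        # Round-robin: every partition gets exactly one owner in the group.
        for i, partition in enumerate(self.partitions):
            owner = members[i % len(members)]
            self.assignment[owner].append(partition)


group = ConsumerGroup(partitions=range(4))
group.join("consumer-a")
after_first_join = dict(group.assignment)
group.join("consumer-b")
after_second_join = dict(group.assignment)
group.leave("consumer-a")               # e.g. the consumer crashed
after_leave = dict(group.assignment)
```

Each membership change recomputes the whole assignment, so no partition is ever left without an owner or shared between two consumers of the group.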

Offset Storage

Brokers themselves are stateless and do not store which offset a consumer is at. Instead, ZooKeeper stores an offset for each partition per consumer group. When a consumer successfully acks a message, its partition and offset are stored in ZooKeeper. After a rebalance, the new consumer of a partition gets the last acked offset from ZooKeeper and asks the broker for messages from that offset.
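The hand-off of a partition between consumers can be sketched as below. The `OffsetStore` class is a hypothetical in-memory stand-in for the external store, the partition is modelled as a Python list indexed by offset, and the stored value is taken to be the next offset to read. All of these are assumptions of the sketch.

```python
class OffsetStore:
    """In-memory stand-in for the external offset store (sketch)."""

    def __init__(self):
        self._offsets = {}              # (group, topic, partition) -> next offset

    def commit(self, group, topic, partition, offset):
        self._offsets[(group, topic, partition)] = offset

    def fetch(self, group, topic, partition):
        # A group that has never committed starts at the beginning.
        return self._offsets.get((group, topic, partition), 0)


def consume(log, store, group, topic, partition, max_messages):
    """Resume from the committed offset and commit after each message."""
    start = store.fetch(group, topic, partition)
    end = min(start + max_messages, len(log))
    processed = []
    for offset in range(start, end):
        processed.append(log[offset])
        # Ack: record that everything before offset + 1 is done.
        store.commit(group, topic, partition, offset + 1)
    return processed


log = ["a", "b", "c", "d", "e"]         # one partition, offsets 0..4
store = OffsetStore()
first = consume(log, store, "group-1", "events", 0, max_messages=3)
# After a rebalance, another consumer in the same group picks up the partition.
second = consume(log, store, "group-1", "events", 0, max_messages=5)
```

The second consumer holds no state of its own; it resumes purely from what the store reports for the group.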

And?

Kafka is a high-performance system and has led to new patterns in the architecture of distributed systems. It allows producers and consumers to be decoupled: the producer does not tell the consumer what action to perform with a message. The producer simply announces that an event happened, and any downstream system can choose to act on it or not act at all.

Kafka has also enabled capabilities such as cross-region replication, data sync across heterogeneous systems (e.g. Cassandra to Elasticsearch), and real-time analytics dashboards.

Kafka is working to remove ZooKeeper as a dependency and to store metadata in a Raft quorum instead (KIP-500).

Links: Kafka Paper Website